23.3 Standard Error and Prediction Intervals

This section describes the key statistical concepts and calculations used within TreeAge Pro.

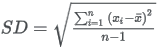

Standard Deviation and Standard Error

- Standard deviation (SD) measures the amount of variability, or dispersion, of the individual simulation data values around the mean.

- Standard error of the mean (SE) measures how far the simulation mean (average) is likely to be from the "true" mean. The "true" mean would reflect an infinite number of model iterations. As we run more iterations, our mean will approach the "true" mean.
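The relationship between the two measures can be sketched in a few lines of Python. This is only an illustration, not TreeAge Pro's internal calculation: the function name `sd_and_se` and the sample cost values are hypothetical, and the sketch assumes the sample SD (n - 1 denominator) and the usual relationship SE = SD / sqrt(n).

```python
import math
import statistics

def sd_and_se(values):
    """Return (mean, SD, SE) for a list of per-iteration simulation outputs.

    Assumption: sample SD with an n - 1 denominator; SE = SD / sqrt(n).
    """
    n = len(values)
    if n < 2:
        raise ValueError("need at least two simulation values")
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)   # spread of individual values around the mean
    se = sd / math.sqrt(n)          # uncertainty of the mean itself
    return mean, sd, se

# Hypothetical cost outputs from four iterations
costs = [1000.0, 1200.0, 900.0, 1100.0]
mean, sd, se = sd_and_se(costs)
print(f"mean={mean:.2f} SD={sd:.2f} SE={se:.2f}")
```

Because SE divides SD by the square root of the number of iterations, running four times as many iterations roughly halves the SE, while the SD stays about the same.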

Confidence Interval vs. Prediction Interval

- Both intervals provide a range within which we can expect the "true" mean to fall, based on a defined confidence level. Typically, researchers treat a 95% confidence level as sufficient. At a 95% confidence level, the range is the simulation mean ± 1.96 × SE.

- Confidence intervals provide a range for mean values from observed clinical data.

- Prediction intervals provide a range for mean values from model-generated simulation output data.
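As a rough illustration of the mean ± 1.96 × SE range, the following Python sketch computes a 95% interval from simulation output. The function name `mean_interval` and the sample values are hypothetical, and the sketch assumes the sample SD (n - 1 denominator) when deriving the SE.

```python
import math
import statistics

def mean_interval(values, z=1.96):
    """Return (low, high) = mean -/+ z * SE for per-iteration outputs.

    z = 1.96 corresponds to a 95% confidence level.
    Assumption: SE is the sample SD divided by sqrt(n).
    """
    n = len(values)
    if n < 2:
        raise ValueError("need at least two simulation values")
    mean = statistics.fmean(values)
    se = statistics.stdev(values) / math.sqrt(n)
    return mean - z * se, mean + z * se

# Hypothetical cost outputs from four iterations
low, high = mean_interval([1000.0, 1200.0, 900.0, 1100.0])
print(f"95% interval: {low:.2f} to {high:.2f}")
```

The interval is symmetric around the simulation mean and narrows as more iterations reduce the SE.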

ICER Prediction Intervals

SD, SE, and prediction interval values can be calculated directly from simulation results for primary model outputs like cost and effectiveness. However, establishing variance and prediction intervals for the Incremental Cost-Effectiveness Ratio (ICER) is complicated by the fact that the ICER is a ratio of model outputs. This ratio can be very unstable, particularly when incremental effectiveness is close to or crosses zero. The article below provides a detailed description of the derivation of ICER Prediction Intervals based on incremental net monetary benefits (INMB).
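To see why net benefits are easier to work with than the ratio, the sketch below computes INMB per iteration and places a mean ± 1.96 × SE range around it. It is a simplified illustration and not necessarily the derivation used in the referenced article or in TreeAge Pro. It assumes the standard definition INMB = WTP × incremental effectiveness - incremental cost; the function name `inmb_interval`, the willingness-to-pay (WTP) value, and the sample data are all hypothetical.

```python
import math
import statistics

def inmb_interval(delta_costs, delta_effects, wtp, z=1.96):
    """Return (mean INMB, low, high) from paired per-iteration increments.

    Assumption: INMB = wtp * delta_effect - delta_cost for each iteration,
    with the range computed as mean -/+ z * SE of those INMB values.
    """
    if len(delta_costs) != len(delta_effects):
        raise ValueError("increments must be paired per iteration")
    inmb = [wtp * de - dc for dc, de in zip(delta_costs, delta_effects)]
    mean = statistics.fmean(inmb)
    se = statistics.stdev(inmb) / math.sqrt(len(inmb))
    return mean, mean - z * se, mean + z * se

# Hypothetical increments; effectiveness is near zero and changes sign,
# so a per-iteration ratio delta_cost / delta_effect would be undefined
# or would swing between large positive and negative values.
delta_costs = [500.0, 450.0, 550.0, 520.0]
delta_effects = [0.02, -0.01, 0.03, 0.00]

mean_inmb, low, high = inmb_interval(delta_costs, delta_effects, wtp=50000.0)
print(f"mean INMB={mean_inmb:.2f}, range {low:.2f} to {high:.2f}")
```

INMB is a linear combination of cost and effectiveness, so it stays well defined in every iteration, including those where incremental effectiveness is zero or negative.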