34.12 Assigning onetime costs and utilities

There are a number of different situations which may require assigning a onetime reward in a Markov model, rather than an incremental reward for each cycle spent in a particular state.

Prior costs

In some models, it is necessary to account for costs, utilities, or life expectancy that occurred prior to the Markov process. Consider, for example, a tree which deals with the uncertainties associated with a particular treatment. In this model, a Markov process will be encountered only if a particular event occurs. In the standard tree structure, costs are incorporated into a payoff formula at terminal nodes. In the scenario including the Markov model, though, these costs must also be accounted for in Markov rewards.

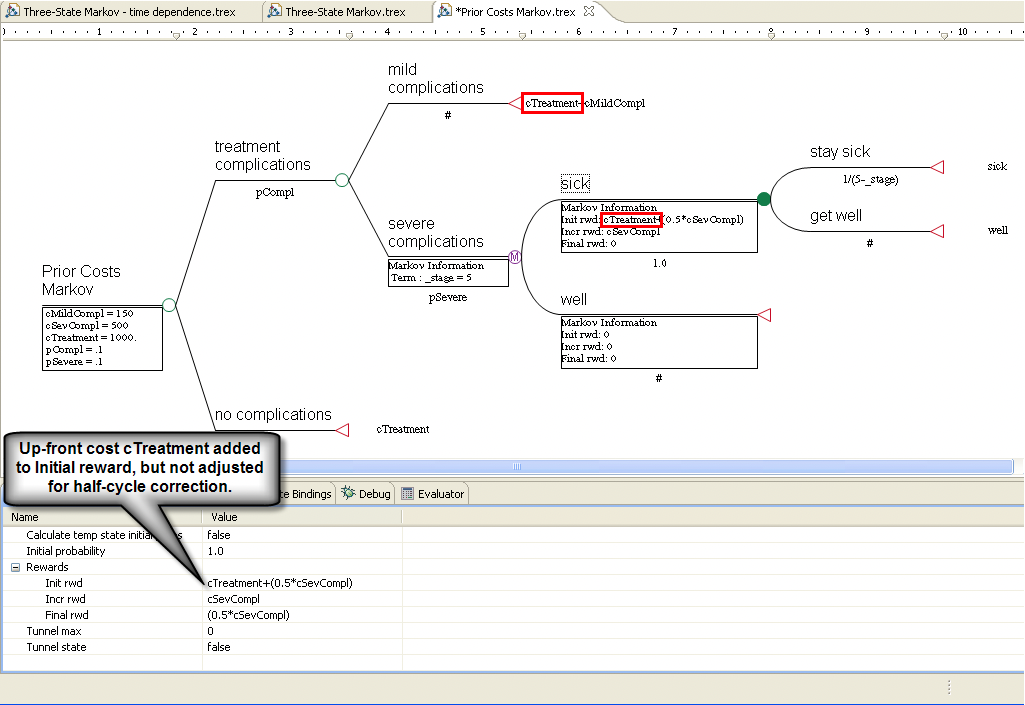

Typically, prior value expressions should be entered in the initial state reward of all states with a nonzero initial probability. This would ensure, for example, that all members of the cohort receive the prior costs. In a cost-effectiveness model (or any model with multiple attributes) be sure to use the appropriate reward set. Cost-effectiveness Markov models are discussed later in this chapter. If your model also uses the half-cycle correction (see above), the initial reward expressions must combine the prior values and the half reward.

To include prior costs in a Markov model:

- Create and define a variable or expression that represents all costs accumulated before the Markov process.
- For each state with a nonzero initial probability, update the initial reward expression to add the prior costs expression, remembering to keep the half-cycle correction if needed.
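The arithmetic behind these two steps can be sketched in a few lines of Python. This is only an illustration, not the software's implementation: the function names, the cost figures, and the assumption that the half-cycle correction contributes half of a state's per-cycle cost to its initial reward are all hypothetical.

```python
def initial_reward(prior_cost, cycle_cost, half_cycle=True):
    """Initial (stage 0) reward for one state: prior costs plus,
    if the half-cycle correction is used, half the per-cycle cost."""
    half = 0.5 * cycle_cost if half_cycle else 0.0
    return prior_cost + half


def expected_initial_cost(initial_probs, cycle_costs, prior_cost, half_cycle=True):
    """Cohort-level cost accrued at stage 0.

    Only states with a nonzero initial probability contribute, so the
    prior cost is counted once for every member of the cohort.
    """
    return sum(
        p * initial_reward(prior_cost, c, half_cycle)
        for p, c in zip(initial_probs, cycle_costs)
        if p > 0
    )


# Hypothetical numbers: three states, the third has no initial membership.
initial_probs = [0.8, 0.2, 0.0]
cycle_costs = [1000.0, 5000.0, 0.0]
prior_cost = 2500.0

stage0_cost = expected_initial_cost(initial_probs, cycle_costs, prior_cost)
prior_only = expected_initial_cost(initial_probs, cycle_costs, prior_cost, half_cycle=False)
```

Because the initial probabilities sum to 1, the prior-cost portion of the stage 0 total equals the full prior cost, which is the point of adding it to every state with a nonzero initial probability.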

The Health Care tutorial example tree "Prior Costs Markov" is shown below.

Transition rewards

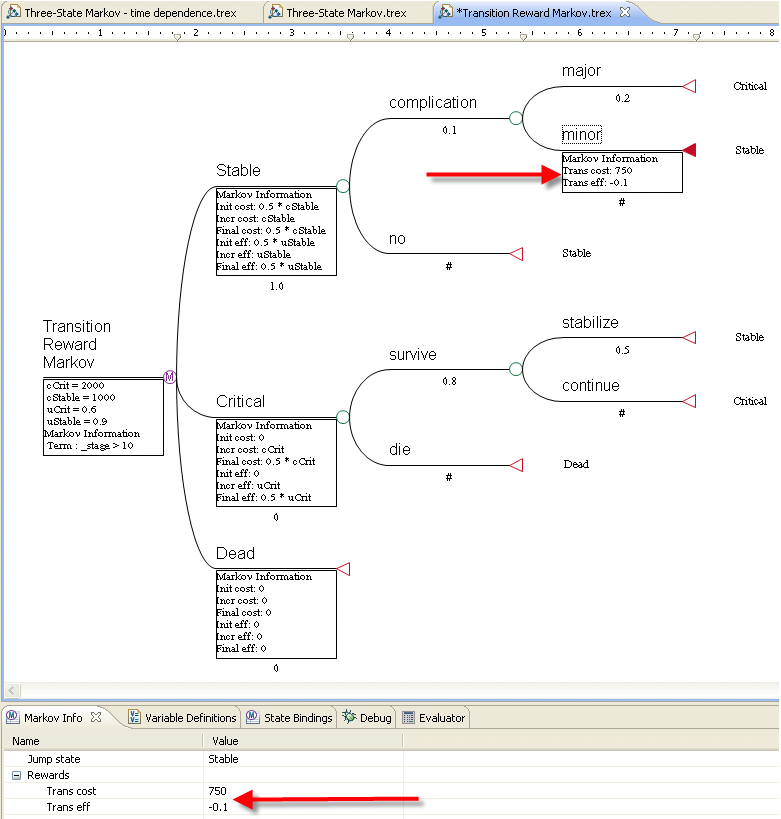

In some models, you may need to account for a cost or disutility associated with a transient event rather than a state. In many such cases, a transition reward can be used. Transition rewards can be assigned at any node to the right of the Markov state nodes (not just the actual transition nodes).

For instance, a onetime cost may be associated with admission as an inpatient. This cost is not incremental and should not be accumulated in each interval spent in the hospital. Nor can it be assigned using an initial state reward unless admission occurs only at cycle 0, because initial rewards are only assigned when _stage = 0.

Another example might be a relatively minor complication event during treatment. Although the complication is not a state itself, and may have no effect on state transition, it may have costs and/or disutilities associated with it.
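The difference between a onetime event cost and an incremental state cost can be illustrated with a small cohort calculation. The following is a minimal sketch under assumed values: the two-state model (home, hospital), the probabilities, and the function name `simulate_admissions` are hypothetical and are not taken from the tutorial trees.

```python
def simulate_admissions(cycles, p_admit, p_discharge, admission_cost, stay_cost):
    """Track a cohort moving between 'home' and 'hospital'.

    admission_cost is charged once, on the transition into hospital.
    stay_cost is an incremental reward, charged every cycle to the
    fraction of the cohort occupying the hospital state.
    """
    home, hospital = 1.0, 0.0
    total_admission = 0.0
    total_stay = 0.0
    for _ in range(cycles):
        admitted = home * p_admit
        discharged = hospital * p_discharge
        # Onetime cost: applied only to the fraction making the transition.
        total_admission += admitted * admission_cost
        home = home - admitted + discharged
        hospital = hospital + admitted - discharged
        # Incremental cost: applied to everyone in the state this cycle.
        total_stay += hospital * stay_cost
    return total_admission, total_stay


# Hypothetical inputs for two cycles.
admission_total, stay_total = simulate_admissions(
    cycles=2, p_admit=0.1, p_discharge=0.5, admission_cost=1000.0, stay_cost=200.0
)
```

If the admission cost were entered as a state reward instead, patients who remain in hospital for several cycles would be charged for admission again in every cycle.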

In the Health Care tutorial example tree "Transition Reward Markov", transition rewards are specified both for costs (in reward set #1) and for effectiveness (in reward set #2).

To assign a transition reward:

- Select the node where the event occurs, to the right of a Markov state.
- Choose Views > Markov from the toolbar.
- Enter values in one or more of the fields Rewards > Trans <Reward Type> in the Markov View.

If the Markov information display preference is turned on, transition reward expressions are shown below the branch.

Notes on transition rewards:

- Transition rewards are not accounted for separately from state rewards; that is, they become part of the total cost or effectiveness value. You can see where they are accumulated in the Cohort Analysis report.
- Transition rewards are associated with the cycle in which they occur.
- In cohort analysis reports, transition rewards are not associated with the state in which they occur; instead, they are divided among the Markov states to which the transition may lead based on the relative transition probabilities.
- Like state rewards, transition rewards are added to the net reward. Thus, transition rewards should be entered using the appropriate sign, positive or negative. For example, transition costs are normally entered as positive numbers, while transition disutilities are normally negative numbers.
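The last two notes can be made concrete with a short Python sketch. It is one reading of the description above, not the software's reporting code: the function name `apportion_transition_reward`, the state names, and all numbers are hypothetical.

```python
def apportion_transition_reward(reward, cohort_fraction, destination_probs):
    """Split a transition reward among the destination states.

    reward            -- transition reward per person, signed
                         (positive for a cost, negative for a disutility)
    cohort_fraction   -- fraction of the cohort passing through the event node
    destination_probs -- mapping of destination state to transition probability
    """
    total_prob = sum(destination_probs.values())
    return {
        state: cohort_fraction * reward * prob / total_prob
        for state, prob in destination_probs.items()
    }


# Hypothetical event: 20% of the cohort has a complication, after which
# 75% move to 'Well' and 25% move to 'Sick'.
destinations = {"Well": 0.75, "Sick": 0.25}

# A transition cost is entered as a positive number...
cost_by_state = apportion_transition_reward(500.0, 0.2, destinations)

# ...while a transition disutility is entered as a negative number.
utility_by_state = apportion_transition_reward(-0.05, 0.2, destinations)

# The shares add up to the full reward for the cycle in which the event occurs.
cycle_cost = sum(cost_by_state.values())
cycle_utility = sum(utility_by_state.values())
```

Under these assumptions the cycle's total transition cost is unchanged by the split; only the state to which it is attributed in the report differs.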